There are now many reviews of film-developer combinations on the internet. Most of them are quite subjective and hardly worth the reading time. The usual format is simple: use a film-developer combination and take a range of pictures of different subjects under different conditions. Then look at the results by eye and let emotion guide the verdict.

This is not the way to present information about film-developer combinations that a reader can use. The classical approach is also quite simple: photograph a grey card at a range of exposures to create a series of negatives with different densities, and use a densitometer to measure these densities. Then photograph a test chart and assess resolution and sharpness under a microscope with sufficient magnification.
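Both measurements in this approach reduce to simple arithmetic. The sketch below is a minimal Python illustration, not the author's procedure: it assumes the densitometer reading is expressed as a transmittance fraction T, uses the standard definition D = log10(1/T), and takes one stop of exposure as a factor of 2, that is log10(2) ≈ 0.30 in log exposure. The function names are hypothetical.

```python
import math


def optical_density(transmittance):
    """Optical density D = log10(1 / T) for a transmittance T in (0, 1]."""
    if not 0.0 < transmittance <= 1.0:
        raise ValueError("transmittance must be in (0, 1]")
    return -math.log10(transmittance)


def log_exposure_steps(stops_from_reference):
    """Relative log10 exposure of each frame; one stop = log10(2), about 0.30."""
    return [s * math.log10(2) for s in stops_from_reference]


# Hypothetical grey-card bracket: -4 to +4 stops around the meter reading.
bracket = list(range(-4, 5))
print([round(x, 2) for x in log_exposure_steps(bracket)])

# A frame that passes 10% of the densitometer light has density 1.0.
print(round(optical_density(0.1), 2))
```

Pairing each frame's log exposure with its measured density gives the points of the characteristic curve; a nine-frame bracket from -4 to +4 stops spans about 2.4 log exposure units.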

This is what I did:

I used one film, the best on the market (Ilford Delta 100), a grey card and the Tirion test chart.

On my camera I had a lens of excellent quality, stopped down to f/5.6. A first remark: even at this aperture, you need to work carefully to get the best results. The microscope offered magnifications of 40x and 100x; for comparison, the largest enlargement commonly used in practice is 15x to 20x.

The developers used in this test are:

1. FX39-II: the classical high acutance developer, formulated by the late Geoffrey Crawley and now made by Adox. This is one of the best developers on the market.

2. Adonal: also made by Adox and a reformulation of the classical Rodinal.

3. Pyro 48: made by Moersch Chemie, a new version of the classic pyro developer.

4. SuperGrain: a new version of AM74.

5. The Df96 monobath, made by Cinestill. This is an interesting developer because it combines developer and fixer in one solution, and you need only one development time for all films. The classic formula gives very fine grain but less sharpness, so it is interesting to see how the modern remake performs.

The film was exposed at its nominal speed (ISO 100), and the light was measured with a Sekonic Speedmaster using the incident method. The camera was a Leica M7 with a Summilux-M 1.4/50 ASPH.

The development data are:

1. FX39-II: dilution 1+9; temperature 20 °C; 7 minutes; agitation: continuous for the first 30 seconds, then twice per minute

2. Adonal: dilution 1+25; temperature 20 °C; 9 minutes; agitation: continuous for the first minute, then 30 seconds per minute

3. Pyro 48: dilution 2.5 + 5 + 250; temperature 20 °C; 16 minutes; agitation: continuous for the first minute, then twice per minute

4. SuperGrain: dilution 1+9; temperature 20 °C; 6 minutes; agitation: continuous for the first 30 seconds, then twice every 30 seconds

5. Df96: stock solution; temperature 22 °C; 6 + 4 minutes (the additional 4 minutes to clear the negatives); agitation: moderate, twice per minute
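The dilutions above are ratios of parts, so the volumes for a given tank follow from dividing the total volume by the sum of the parts. A minimal sketch, assuming a hypothetical 500 ml working volume and assuming that Pyro 48's "2.5 + 5 + 250" means part A + part B + water (the review does not spell this out); `mix_volumes` is a hypothetical helper name.

```python
def mix_volumes(parts, total_ml):
    """Split total_ml according to an 'a + b + ...' dilution ratio.

    parts: e.g. [1, 9] for '1+9' (stock + water), or [2.5, 5, 250] for
    Pyro 48 (assumed here to be part A + part B + water).
    """
    if total_ml <= 0 or not parts or any(p <= 0 for p in parts):
        raise ValueError("need a positive volume and positive parts")
    whole = sum(parts)
    return [total_ml * p / whole for p in parts]


# Hypothetical 500 ml working solution for three of the dilutions listed.
for name, ratio in [("FX39-II 1+9", [1, 9]),
                    ("Adonal 1+25", [1, 25]),
                    ("Pyro 48 2.5+5+250", [2.5, 5, 250])]:
    volumes = mix_volumes(ratio, 500)
    print(name, [round(v, 1) for v in volumes])
```

For 500 ml at 1+9 this gives 50 ml of concentrate and 450 ml of water.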

R

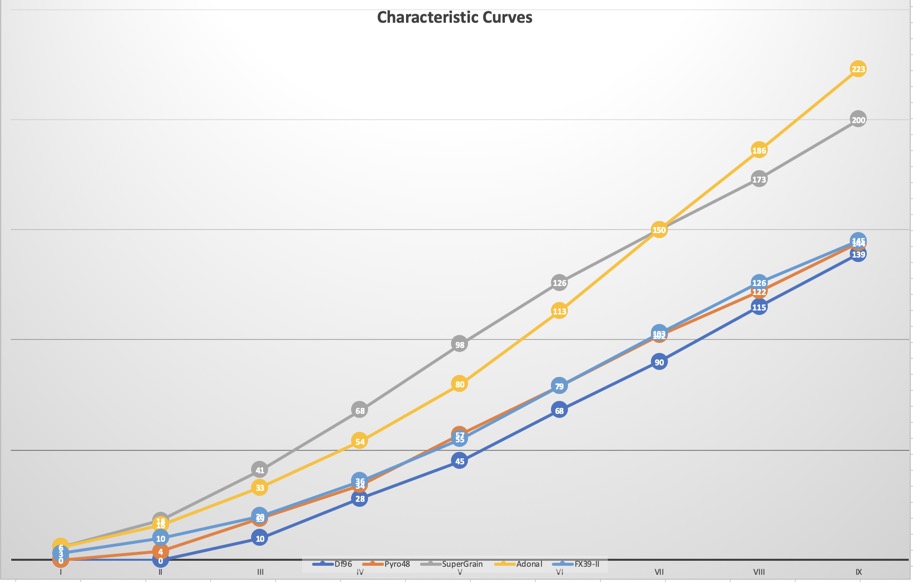

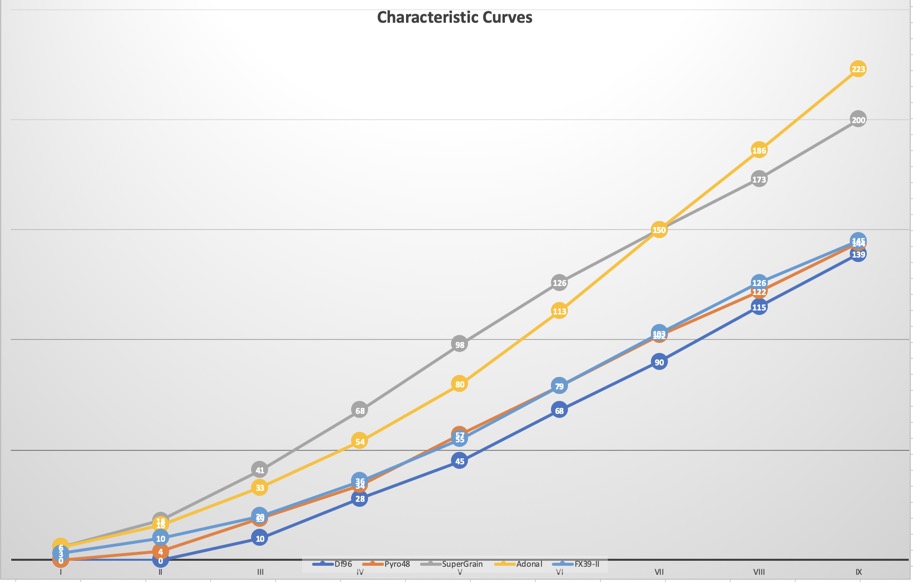

esults: tonal range.The density range gives information about the effective speed, the maximum useable density for the highlights and the steepness of the curve says something about the subtleties of the tonal differences: a steep curve is an indication that the mid tones are very well separated and a less steep curve tells you that the tonal differences are well recorded but more difficult to observe.

The graph above shows the details.
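The two properties discussed here, curve steepness and usable range, can be read off densitometer data with a little arithmetic. A minimal sketch under stated assumptions: the curve is a list of (log exposure, density) pairs, steepness is taken as the average gradient ΔD/ΔlogH between two chosen points (one of several possible contrast measures, not necessarily the one behind the graph), and the numbers are made up for illustration, not the measurements from this test. The function names are hypothetical.

```python
import math


def average_gradient(curve, logh_low, logh_high):
    """Slope dD / dlogH of a characteristic curve between two log-exposure values.

    curve: list of (logH, density) pairs sorted by logH; densities between
    measured points are linearly interpolated.
    """
    def density_at(x):
        for (x0, d0), (x1, d1) in zip(curve, curve[1:]):
            if x0 <= x <= x1:
                return d0 + (d1 - d0) * (x - x0) / (x1 - x0)
        raise ValueError("logH outside the measured range")

    return (density_at(logh_high) - density_at(logh_low)) / (logh_high - logh_low)


def range_in_stops(logh_span):
    """Convert a log10 exposure span into stops (1 stop = log10 2, about 0.30)."""
    return logh_span / math.log10(2)


# Synthetic curve (illustration only): a toe, then a straight line of slope 0.6.
curve = [(0.0, 0.10), (0.3, 0.15), (0.6, 0.30), (0.9, 0.48),
         (1.2, 0.66), (1.5, 0.84), (1.8, 1.02), (2.1, 1.20)]
print(round(average_gradient(curve, 0.9, 2.1), 2))
print(round(range_in_stops(9 * math.log10(2)), 2))
```

A steeper curve gives a larger gradient, which corresponds to better separated mid tones. With one stop at about 0.30, the nine stops quoted for the second group correspond to a log exposure span of about 2.7.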

The results fall into three groups. The first group comprises Adonal and SuperGrain. With these developers, the tonal differences in the extreme highlights will be difficult or impossible to print, but the shadow areas are very well recorded with good local contrast. In practice the full speed of the film is exploited, but the dilutions could be higher (Adonal 1+50 or 1+100, SuperGrain 1+15 or even 1+20) with a proportional increase in development time. Reducing the development time is advisable to lower the highlight densities. Some experimentation is a rewarding exercise!
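The suggestion to raise the dilution "with proportional increase of the development time" can be read literally as scaling the time with the total dilution factor (1 + n for a 1+n dilution). A minimal sketch of that literal reading; the rule and the function name `scaled_time` are assumptions for illustration, and any time it produces is a starting point for a test film, not a recommendation.

```python
def scaled_time(minutes, old_water_parts, new_water_parts):
    """Scale development time in proportion to the total dilution factor.

    Assumed rule: a '1+n' dilution has total factor (1 + n), so going from
    1+25 to 1+50 multiplies the time by 51/26. Verify with a test film.
    """
    if minutes <= 0 or old_water_parts < 0 or new_water_parts < 0:
        raise ValueError("invalid time or dilution")
    return minutes * (1 + new_water_parts) / (1 + old_water_parts)


# Starting from the development data used in this test.
for label, t, old, new in [("Adonal 1+25 -> 1+50", 9, 25, 50),
                           ("SuperGrain 1+9 -> 1+15", 6, 9, 15),
                           ("SuperGrain 1+9 -> 1+20", 6, 9, 20)]:
    print(f"{label}: {scaled_time(t, old, new):.1f} min")
```

Under this literal rule, Adonal at 1+50 would need about 17.7 minutes, and SuperGrain 9.6 minutes at 1+15 and 12.6 minutes at 1+20.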

The second group comprises FX39-II and Pyro 48. Both developers score very well on tonal range and highlight density, producing subtle tonal shades with high overall contrast. Nine stops is a good score that matches the claimed tonal range of most digital cameras. For the best shadow recording, the film speed should be reduced by one third of a stop (FX39-II) or even half a stop (Pyro 48).

The third group has only one member: the Cinestill monobath. It has a very convenient processing cycle, with no stop bath and no fixer. The highlights are well recorded, matching the second group. Disappointing is the steep drop in the shadow area: after two stops of underexposure nothing is recorded, so deep shadows will print completely black without any trace of subject contours. Reducing the film speed will help, and the instruction leaflet says that pull processing is possible. My recommendation: set the meter to ISO 50 and use the 6-minute development time. This developer is best used when the scene has no deep shadows.
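The speed reductions recommended for the second and third groups translate into exposure index settings via EI = ISO × 2^(-stops). A minimal sketch; the function name `reduced_speed` is hypothetical, and the result should be rounded to the nearest setting your meter offers.

```python
def reduced_speed(iso, stops):
    """Exposure index after rating the film `stops` slower than its box speed."""
    return iso * 2 ** (-stops)


# Reductions from ISO 100 suggested for the three groups.
for label, stops in [("one third stop (FX39-II)", 1 / 3),
                     ("half a stop (Pyro 48)", 1 / 2),
                     ("one stop (Df96)", 1)]:
    print(f"{label}: EI {reduced_speed(100, stops):.0f}")
```

This gives about EI 79 for one third of a stop (in practice ISO 80 on a meter set in thirds), about 71 for half a stop, and exactly 50 for one full stop, the Df96 setting recommended above.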

The ranking for speed and tonal range is:

1 FX39-II

2 Pyro 48

3 SuperGrain

4 Adonal

5 Df96

Note that numbers 3 and 4 could score better after some experimentation with the speed setting and development time.

Results: grain and definition

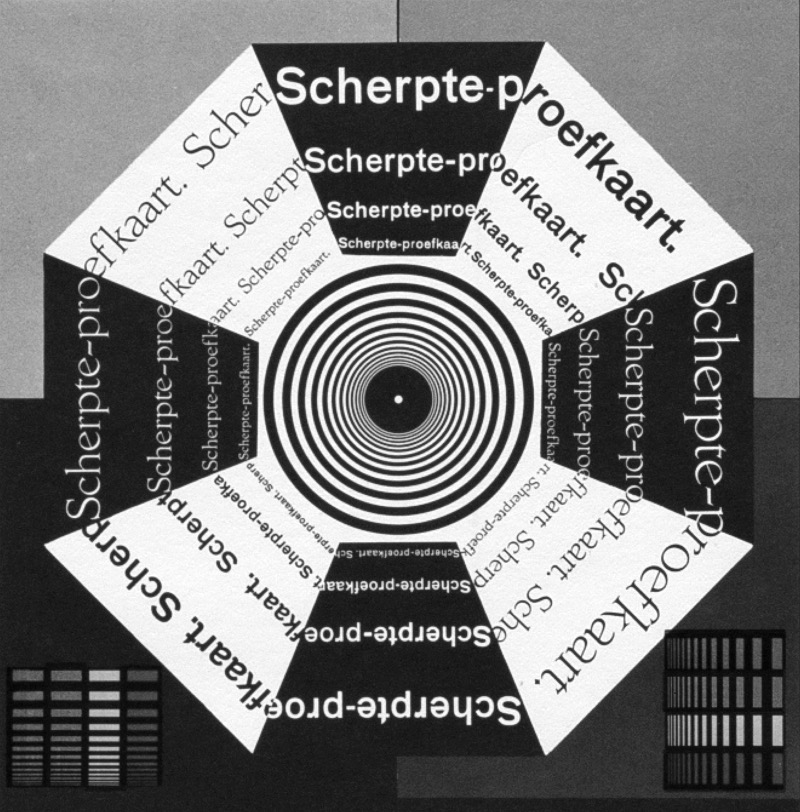

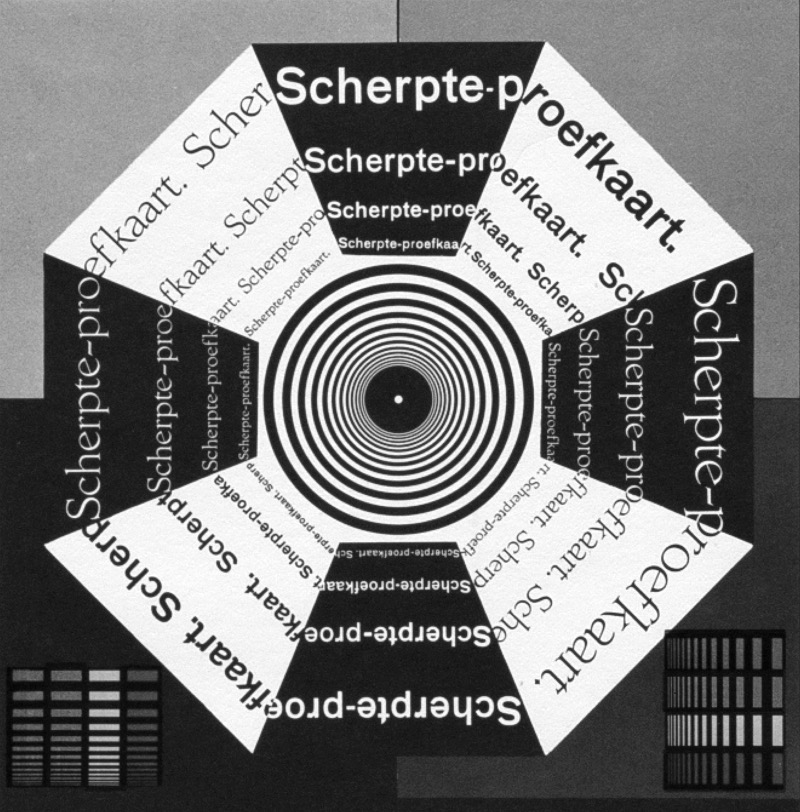

The test chart has a number of intriguing details: it shows fine print in several sizes, printed both white on black and black on white. The chart is arranged as a series of pie charts numbered 1 to 8 (1 is at the top). The white-on-black print is more difficult to read because the large area of black grains will spill over into the thin white lines of the fine print.

The grain is quite pronounced with Adonal (as expected) and hardly visible with Df96 (not expected, but presumably due to the large amount of sulphite). Between these two extremes, the other three show a similar grain pattern. The ranking for grain is:

1 Df96

2 Pyro 48

3 FX39-II

4 SuperGrain

5 Adonal

The smallest fine print that is just readable marks the resolution limit. Here the ranking is:

1 FX39-II

2 Pyro 48

3 Df96

4 SuperGrain

5 Adonal
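The review ranks the developers separately for speed and tonal range, grain and resolution. One simple way to combine the three lists, which is not the method used in the review, is an unweighted rank sum: each developer's positions are added and the lowest total wins. A minimal sketch using the rankings exactly as listed; equal weighting is an assumption, and a photographer who values grain over resolution would weight the lists differently. `rank_sum` is a hypothetical helper name.

```python
# Rankings as listed in this review (position 1 = best).
rankings = {
    "speed and tonal range": ["FX39-II", "Pyro 48", "SuperGrain", "Adonal", "Df96"],
    "grain": ["Df96", "Pyro 48", "FX39-II", "SuperGrain", "Adonal"],
    "resolution": ["FX39-II", "Pyro 48", "Df96", "SuperGrain", "Adonal"],
}


def rank_sum(rankings):
    """Unweighted sum of rank positions per developer, lowest total first."""
    totals = {}
    for order in rankings.values():
        for position, developer in enumerate(order, start=1):
            totals[developer] = totals.get(developer, 0) + position
    return sorted(totals.items(), key=lambda item: item[1])


for developer, total in rank_sum(rankings):
    print(developer, total)
```

With equal weights the totals are FX39-II 5, Pyro 48 6, Df96 9, SuperGrain 11 and Adonal 14.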

General conclusion

Overall, there is not much to choose between the five developers, which is also a tribute to the Ilford emulsion. An old saying holds that the main characteristics are fixed by the emulsion, and all the developer can do is adjust the balance between grain, tonal range and definition a little. This test supports that statement.

Today there are other considerations as well. Adonal is quite flexible and has a long shelf life. It produces excellent sharpness with pronounced grain, but it can be used with every film and the shape of the curve can be influenced to a high degree. It is also very cheap.

Df96 is also quite flexible: it can be used with all films, has simple instructions and a long shelf life, but one litre is limited to 16 rolls of 135 film. Deep-shadow recording is non-existent, but when this is not a problem, the developer is very easy to use and you need no fixing solution.

SuperGrain works like an improved version of Rodinal: it gives very sharp results with moderate grain and a very fine tonal range. Most films require only one development time and one dilution.

Pyro 48 and FX39-II are the best at recording extremely fine detail. Grain is fine, and the tonal range fits well within grade 2 of the Splitgrade/Multigrade print range. FX39-II gives very clean negatives, whereas Pyro 48 has its staining effect. The only drawback of Pyro 48 is the restricted range of films that suit this developer.

My choice for this Ilford film, then, is FX39-II. It has excellent definition, fine grain and a long tonal range with good shadow detail and subtle highlights.

Note: with the exception of the characteristic curves, all results were observed under the microscope at 40x magnification. Light scatter in the enlarger will degrade the final print, and in that case Adonal and SuperGrain, because of their specific grain size and distribution, may hold detail to a larger degree. In fact, you cannot make a wrong choice with any of these developers. Fine-tuning the exposure method and experimenting with development time, temperature and agitation will improve the results. Everyone has their own requirements and visual standards, but the results presented here should provide a good starting point.